This blog post offers a few simple tips and tricks to ensure that your security controls do not interfere with each other. The tricks are not really tricks, just plain old configurations that offer food for thought. As we know, Splunk is the most complex beast of a piece of software out there.

The dreaded license consumption

One aspect of Splunk is (sadly) always optimizing for license. Optimizing should never mean blindly, and without serious thought, leaving anything relevant outside of your logging scope. Usually, once you are up and running, license usage normalizes and stays at a manageable level without any surprises (except for the occasional process that goes mad once per year and logs a gazillion bytes). There are situations, though, where you make an otherwise controlled and planned change that should not affect anything. And then it still does. One of these is rolling out a seemingly simple, small piece of software to your servers.

In this blog I will cover two external controls (Defender ATP and an external vulnerability scanner) which will affect your Splunking and license consumption, but can be handled in an elegant way without compromising logging and visibility.

Introducing Microsoft Defender ATP for Linux

If you are not familiar with the fact that Microsoft has an excellent tool for EDR and endpoint protection, get familiar with it. This is truly the first tool that brings the same level of visibility into Windows, Mac and Linux environments. Not 99% of the features on Windows and 1% of the features (for marketing purposes) on the other platforms. This blog is not about the excellence of Defender, but let’s just quickly have a look at what we can see and monitor.

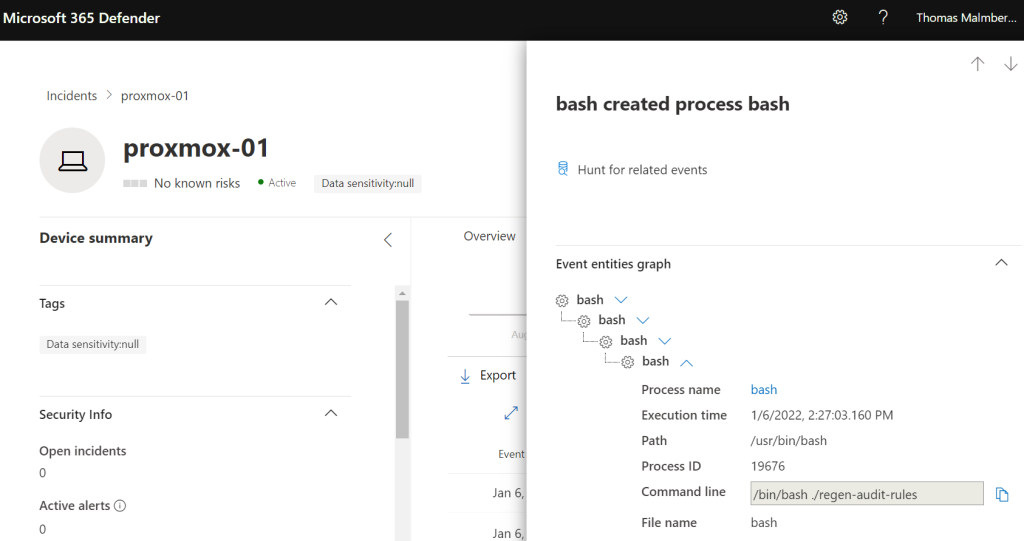

In the above screenshot we can see that I have just regenerated the auditd rules as part of this exercise. This is just one example of the level of visibility we get into the operating system.

Read more about Defender and installing it here: https://docs.microsoft.com/en-us/microsoft-365/security/defender-endpoint/microsoft-defender-endpoint-linux.

The strategy for installing Defender on Linux should be planned ahead. In our case, the chosen strategy was to run Defender on the virtualization platform (Proxmox), but NOT on every virtual machine. As a side note, my experience is that on Proxmox (essentially Debian), the CPU overhead of running Defender is about 2%. This estimate comes simply from comparing the CPU consumption graphs before and after. However, if you experience performance issues with Defender on Linux (and you may), have a look here: https://docs.microsoft.com/en-us/microsoft-365/security/defender-endpoint/linux-support-perf.

Prerequisites for setting this up

The virtualization platform is running Defender and a Splunk Forwarder with the Splunk Add-on for Unix and Linux (https://splunkbase.splunk.com/app/833/) installed. In addition, auditd is installed as this (not surprisingly) is a prerequisite for Defender itself. The forwarder configuration is not really important, as long as you are ingesting the auditd logs into Splunk. The Splunk indexers must have the Linux Auditd Technology Add-On (https://splunkbase.splunk.com/app/4232/) and your Search Heads must have both the Technology Add-on as well as the Linux Auditd App (https://splunkbase.splunk.com/app/2642/) installed.

We are ready to go.

Defender uses auditd.rules to support its functionality

Once Defender is installed, we can observe that it actually creates its very own auditd ruleset for logging. And boy is this ruleset noisy. I mean, really noisy. Usually one could tackle noisy auditd logging by modifying the auditd.rules configuration itself, but as Defender relies on this information, we cannot approach the problem (solely) this way.

-a exit,never -F arch=b64 -F pid=11396 -S 2 -S 41 -S 42 -S 49 -S 82 -S 84 -S 87 -S 101 -S 257 -S 263 -k mdatp

-a exit,never -F arch=b64 -F pid=21419 -S 2 -S 41 -S 42 -S 49 -S 82 -S 84 -S 87 -S 101 -S 257 -S 263 -k mdatp

-a exit,never -F arch=b64 -F pid=21442 -S 2 -S 41 -S 42 -S 49 -S 82 -S 84 -S 87 -S 101 -S 257 -S 263 -k mdatp

-a exit,never -F arch=b64 -F pid=21517 -S 2 -S 41 -S 42 -S 49 -S 82 -S 84 -S 87 -S 101 -S 257 -S 263 -k mdatp

-a exit,never -F arch=b64 -F pid=21632 -S 2 -S 41 -S 42 -S 49 -S 82 -S 84 -S 87 -S 101 -S 257 -S 263 -k mdatp

-a exit,never -F arch=b64 -F pid=21656 -S 2 -S 41 -S 42 -S 49 -S 82 -S 84 -S 87 -S 101 -S 257 -S 263 -k mdatp

-a exit,never -F arch=b32 -F dir=/dev/shm -S 10 -k mdatp

-a exit,never -F arch=b64 -F dir=/dev/shm -S 87 -k mdatp

-a exit,always -F a0=10 -F arch=b64 -F success=1 -S 41 -k mdatp

-a exit,always -F a0=2 -F arch=b64 -F success=1 -S 41 -k mdatp

-a exit,always -F a2=16 -F arch=b64 -S 42 -k mdatp

-a exit,always -F a2=16 -F arch=b64 -F success=1 -S 49 -k mdatp

-a exit,always -F a2=28 -F arch=b64 -S 42 -k mdatp

-a exit,always -F a2=28 -F arch=b64 -F success=1 -S 49 -k mdatp

-a exit,always -F arch=b32 -F success=1 -S 10 -S 38 -S 40 -S 102 -S 301 -S 302 -S 353 -k mdatp

-a exit,always -F arch=b64 -F success=1 -S 43 -S 82 -S 84 -S 87 -S 101 -S 263 -S 264 -S 288 -S 316 -k mdatp
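The syscall numbers in these rules are hard to read at a glance. As a rough aid (not part of the original setup), the Python sketch below splits one rule into its action, architecture, key and syscalls, and translates the x86_64 numbers used by the b64 rules into names. The function name `describe_rule` and the lookup table are illustrative assumptions; the table only covers the numbers that occur in the b64 rules above, and b32 rules are left as raw numbers because they use a different syscall table.

```python
import re

# x86_64 syscall numbers that occur in the b64 rules above.
# b32 rules use the 32-bit syscall table, which is NOT covered here.
X86_64_SYSCALLS = {
    2: "open", 41: "socket", 42: "connect", 43: "accept", 49: "bind",
    82: "rename", 84: "rmdir", 87: "unlink", 101: "ptrace",
    257: "openat", 263: "unlinkat", 264: "renameat", 288: "accept4",
    316: "renameat2",
}


def describe_rule(rule):
    """Split one auditctl-style rule into action, arch, key and syscalls."""
    action = re.search(r"(?:^|\s)-a\s+(\S+)", rule)
    arch = re.search(r"\barch=(\S+)", rule)
    key = re.search(r"(?:^|\s)-k\s+(\S+)", rule)
    numbers = [int(n) for n in re.findall(r"(?:^|\s)-S\s+(\d+)", rule)]
    is_b64 = bool(arch) and arch.group(1) == "b64"
    # Only translate numbers for b64 rules; keep everything else numeric.
    names = [X86_64_SYSCALLS.get(n, str(n)) if is_b64 else str(n) for n in numbers]
    return {
        "action": action.group(1) if action else None,
        "arch": arch.group(1) if arch else None,
        "key": key.group(1) if key else None,
        "syscalls": names,
    }


rule = ("-a exit,always -F arch=b64 -F success=1 -S 43 -S 82 -S 84 -S 87 "
        "-S 101 -S 263 -S 264 -S 288 -S 316 -k mdatp")
info = describe_rule(rule)
print(info["key"], info["syscalls"])
```

Read this way, the ruleset is mostly about network connections (socket, connect, bind, accept) and file removal or renaming, which helps explain why a busy host produces so many events.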

The solution is actually quite neat and straightforward. Microsoft has chosen to tag each of their auditd rules with “-k mdatp”, so we only need to filter out events carrying this key. The elegant thing is that whatever we have decided to configure around auditd ourselves will not interfere with the Defender configuration (as long as we do NOT use mdatp as a key and we make sure our rules are NOT in rules.d/audit.rules, where Defender puts its own rules by default). Overall, we can conclude that this is good thinking and planning by Microsoft.

Customizing our Splunk configuration

Customizing in our case means configuring the indexers to drop the audit logs that Defender needs before indexing them into Splunk. Yes, this means all the data is forwarded to the indexers, but this I decided to accept as a cost of doing business. You could install Heavy Forwarders on the Linux server but I decided that this creates a bit of overkill in that part of the configuration. Enter props.conf and transforms.conf – in the Linux Auditd Technology Add-On on the indexers.

A personal note and a point of grudge for me: Something that every properly engineered Splunk TA should come configured with out of the box is an empty (disabled) nullQueue. But they do not, so now we create one.

I copied props.conf and transforms.conf as-is from default to local as a starting point.

```
[root@master-01 local]# pwd
/opt/splunk/etc/master-apps/TA-linux_auditd/local
[root@master-01 local]# ls -la
total 12
drwxr-xr-x. 2 root root 47 Jan 2 00:40 .
drwxr-xr-x. 7 root root 108 Dec 10 22:33 ..
-rw-r--r--. 1 root root 4935 Dec 10 22:44 props.conf
-rw-r--r--. 1 root root 3059 Jan 2 00:40 transforms.conf
```

Under the [linux_audit] stanza, add the necessary transforms reference (TRANSFORMS-null = setnull).

```
[root@master-01 local]# vi props.conf
[linux_audit]
TRANSFORMS-linux_audit = linux_audit
TRANSFORMS-null = setnull
```

We configure the nullQueue with a simple regular expression that filters out every event containing key=”mdatp”. Note that I also decided to filter out comm=”splunkd” at this point. I decided to trust Splunk implicitly in this case and not log anything related to it. Just add the [setnull] stanza to the end of transforms.conf.

```
[root@master-01 local]# vi transforms.conf
[setnull]
REGEX = (comm="splunkd"|key="mdatp")
DEST_KEY = queue
FORMAT = nullQueue
```
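Before pushing a nullQueue transform to the indexers, it can be worth checking offline which events the regular expression would actually catch. The sketch below does this in Python, on the assumption that for a pattern this simple Python's `re` module behaves the same as the regex engine Splunk uses. The sample events are simplified, made-up audit records, not real log output, and the helper name `routed_to_null_queue` is hypothetical.

```python
import re

# Same pattern as in the [setnull] stanza.
SETNULL = re.compile(r'(comm="splunkd"|key="mdatp")')


def routed_to_null_queue(event):
    """Return True if the event matches the pattern and would be dropped."""
    return SETNULL.search(event) is not None


# Simplified, made-up audit records for illustration only.
samples = [
    'type=SYSCALL msg=audit(1641560000.123:4242): syscall=41 success=yes '
    'comm="curl" exe="/usr/bin/curl" key="mdatp"',
    'type=SYSCALL msg=audit(1641560001.456:4243): syscall=87 success=yes '
    'comm="splunkd" exe="/opt/splunkforwarder/bin/splunkd" key=(null)',
    'type=SYSCALL msg=audit(1641560002.789:4244): syscall=59 success=yes '
    'comm="sudo" exe="/usr/bin/sudo" key="privileged"',
]

for event in samples:
    verdict = "drop" if routed_to_null_queue(event) else "keep"
    print(verdict, event[:60])
```

The first two records match and would be dropped, while the third one, which carries a custom key, would still be indexed.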

Some systems may still require additional auditd exclusion rules

As auditd is a complicated organism, just filtering on the key provided by Microsoft is rarely enough. As it happens, the auditd ruleset for Defender triggers a lot of logging that lacks the key. How much depends very heavily on what kind of system you are running. As my example is Proxmox, a virtualization system, there is heavy activity going on all the time everywhere in the system. This is of course completely normal. Because it is normal, we can (without compromising security too much) also filter a bit more at the auditd level. While writing this blog I ran into another excellent blog by Eric, which you can find here: https://www.ericlight.com/microsoft-defender-for-endpoint-mdatp-on-debian-sid.html. That blog (which happens to be about Defender and Proxmox as well!) has huge value, as it has concrete examples of how to ANALYZE and ACT on high-log-volume processes in the system. By using the cat and grep commands from that blog, I was able to come up with a decent list of “really normal activity” that I now exclude, which again cuts down excess logging.
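The same kind of analysis can also be done with a few lines of Python instead of cat and grep. The sketch below is not the method from either blog; it simply counts SYSCALL records per executable and syscall number so the noisiest combinations stand out. The function name `noisiest` and the sample records are made up for illustration, and the field layout (`syscall=`, `exe="..."`) is assumed to match your audit.log.

```python
import re
from collections import Counter

EXE = re.compile(r'\bexe="([^"]+)"')
SYSCALL = re.compile(r'\bsyscall=(\d+)')


def noisiest(lines, top=10):
    """Count SYSCALL records per (exe, syscall number), most frequent first."""
    counts = Counter()
    for line in lines:
        if "type=SYSCALL" not in line:
            continue
        exe = EXE.search(line)
        syscall = SYSCALL.search(line)
        if exe and syscall:
            counts[(exe.group(1), int(syscall.group(1)))] += 1
    return counts.most_common(top)


# Made-up sample records; on a real host you would instead pass an open
# file handle for your audit log (commonly /var/log/audit/audit.log).
SAMPLE = [
    'type=SYSCALL msg=audit(1641560000.001:101): arch=c000003e syscall=263 '
    'success=yes comm="pmxcfs" exe="/usr/bin/pmxcfs" key=(null)',
    'type=SYSCALL msg=audit(1641560000.002:102): arch=c000003e syscall=263 '
    'success=yes comm="pmxcfs" exe="/usr/bin/pmxcfs" key=(null)',
    'type=SYSCALL msg=audit(1641560000.003:103): arch=c000003e syscall=87 '
    'success=yes comm="lvm" exe="/usr/sbin/lvm" key=(null)',
    'type=PATH msg=audit(1641560000.003:103): item=0 name="/run/lock/lvm"',
]

for (exe, syscall), count in noisiest(SAMPLE):
    print(count, exe, syscall)
```

Each high-count pair is a candidate for an exclusion rule, provided you have judged that activity to be normal for the host in question.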

This is my example exclusion list (which is similar but not at all identical to the one in Eric’s blog). Your mileage will most definitely vary on this one, both technically and tactically.

```
-a never,exit -S 43 -S 84 -S 263 -F exe=/usr/bin/pmxcfs -k exclude_PVE_internals
-a never,exit -S 288 -F exe=/usr/bin/qemu-system-x86_64 -k exclude_PVE_internals
-a never,exit -S 87 -F exe=/usr/sbin/lvm -k exclude_PVE_internals
-a never,exit -S 41 -F exe=/usr/sbin/xtables-legacy-multi -k exclude_PVE_internals
-a never,exit -S 41 -S 54 -F exe=/usr/sbin/ebtables-legacy-restore -k exclude_PVE_internals
-a never,exit -S 41 -S 54 -F exe=/usr/sbin/ebtables-legacy -k exclude_PVE_internals
-a never,exit -S 288 -S 41 -S 42 -S 82 -S 84 -F exe=/usr/bin/perl -k exclude_PVE_internals
-a never,exit -S 59 -F exe=/usr/bin/dash -k exclude_PVE_internals
-a never,exit -S 41 -S 42 -S 82 -S 84 -S 87 -F exe=/opt/splunkforwarder/bin/splunkd -k exclude_PVE_internals
-a never,exit -S 288 -S 87 -F exe=/usr/lib/systemd/systemd-udevd -k exclude_PVE_internals
-a never,exit -S 41 -S 42 -F exe=/opt/microsoft/mdatp/sbin/telemetryd_v2 -k exclude_PVE_internals
-a never,exit -S 257 -S 41 -S 42 -S 82 -S 84 -S 87 -F exe=/opt/microsoft/mdatp/sbin/wdavdaemon -k exclude_PVE_internals
-a never,exit -S 263 -S 299 -S 82 -S 87 -F exe=/usr/lib/systemd/systemd-journald -k exclude_PVE_internals
```

This is how my rules.d directory ended up looking:

```
root@proxmox-01:/etc/audit# ls -la rules.d/
-rw-r--r-- 1 root root 843 Jan 7 14:55 01-exclusion.rules <-- This is the proxmox-specific exclusion ruleset from above
-rw-r----- 1 root root 1343 Jan 7 14:18 audit.rules <-- This is by Microsoft
-rw-r--r-- 1 root root 20529 Jan 7 14:20 mintaudit.rules <-- This is our custom auditd file
```

Review the results

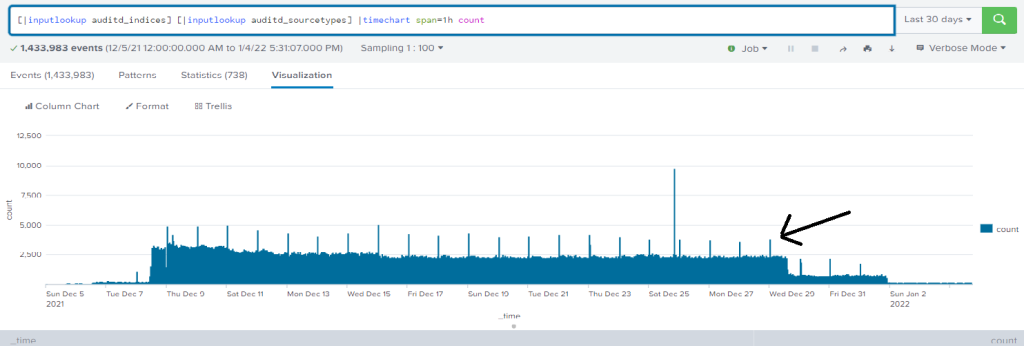

So let’s look at the results. Looking at Sunday, Monday and Tuesday, it is clearly visible that the volume goes down dramatically. Yes, there are still logs coming in, but the noise is gone. Really none of the stuff that Defender uses from its own auditd.rules is interesting at all from a log management point of view. We are happy to get rid of those log lines and only ingest the things we have explicitly decided to log in our own custom auditd.rules (which is a topic for a different blog post altogether).

How does vulnerability scanning relate to this?

Vulnerability scanning is another security related control that creates huge volumes – volumes of requests. And those volumes also create logs in auditd.

Now that we have the nullQueue set up, we can easily add whatever we want to it! Without going too wild, the one thing that consumes license is a vulnerability scanner whose every attempt at anything is being thoroughly logged. This is again not of interest from a log management point of view – monitoring the successes and failures of the vulnerability scanner is done somewhere completely different (and thus not overlooked although we decide not to ingest those logs from the server’s point of view).

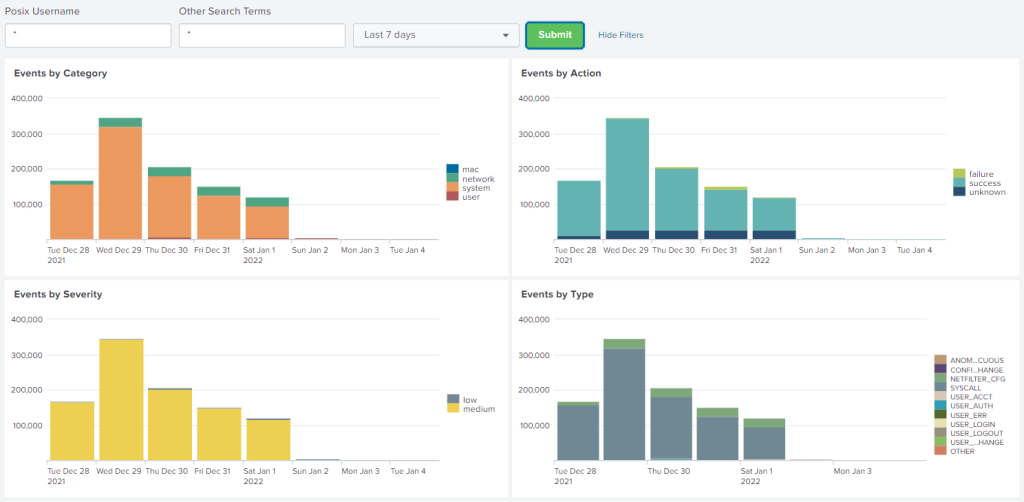

We can clearly see the spikes in ingested logs when the scanner is running. Yes, we really do complete scans of each subnet several times per week.

So, back to transforms.conf and modify the regex.

```
REGEX = (comm="splunkd"|key="mdatp"|ADDR=192.168.257.198)
```

Now each login attempt (or any other activity that auditd logs using ADDR) is left out from ingestion – which is clearly visible in the above image starting from Sunday.
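One detail to keep in mind when putting an IP address into a regular expression: an unescaped dot matches any character, and without a boundary after the last octet the pattern also matches longer addresses that merely start with the same digits. The Python sketch below compares a loose pattern with a stricter variant. The scanner address 192.0.2.10 is a placeholder from the documentation range, the sample events are simplified and made up, and the lookahead `(?!\d)` is an assumption about what your regex engine accepts, so test it before relying on it in transforms.conf.

```python
import re

# Hypothetical scanner address (documentation range), not from the post.
SCANNER_IP = "192.0.2.10"

# Loose: dots unescaped, nothing prevents a longer address from matching.
loose = re.compile(r'(comm="splunkd"|key="mdatp"|ADDR=' + SCANNER_IP + r')')

# Stricter: dots escaped, and the address must not continue with a digit.
strict = re.compile(
    r'(comm="splunkd"|key="mdatp"|ADDR=' + re.escape(SCANNER_IP) + r'(?!\d))'
)

# Simplified, made-up events for illustration only.
events = [
    'type=USER_LOGIN pid=4711 ADDR=192.0.2.10 res=failed',    # the scanner
    'type=USER_LOGIN pid=4712 ADDR=192.0.2.100 res=success',  # another host
]

for event in events:
    print(bool(loose.search(event)), bool(strict.search(event)), event)
```

With the loose pattern, the login from 192.0.2.100 would be dropped along with the scanner traffic; the stricter variant keeps it.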

I'm in the cloud - this does not apply?

Partially right. Let’s break this down a bit.

I don’t have any servers in my infrastructure

- You are correct – this really does not apply

I am using Splunk cloud

- Good for you. If you are using servers of any kind (that includes servers in the cloud, IaaS, by the way) this is an issue for you. You should deploy a heavy forwarder and apply the learnings in this blog to that instead of indexers. In addition, everything related to auditd of course applies.

I am using another cloud-based log management solution (in Azure for example)

- Good for you. If you are using servers of any kind (that includes servers in the cloud, IaaS, by the way) this is an issue for you. The cost of running a cloud-based log management solution, be it Splunk or Microsoft or something else, has surprised users. The cloud, with its seemingly endless capabilities, also comes with a cost. And why wouldn’t it.

Summing it up

The strategy implemented here was very clear:

- Identify things that consume license

- Assess the importance of those things to your security posture

- Stop logging stuff with huge volumes of events that are completely normal

- Stop ingesting stuff that does not add to your security posture (positively) in any way while consuming license in excess
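To put a number on the first two steps before changing anything, you can estimate what share of the raw bytes a candidate filter would remove. The sketch below is a rough Python illustration under the assumption that raw event size is a reasonable proxy for license consumption; the function name `dropped_share` is hypothetical and the pattern is the one from the [setnull] stanza.

```python
import re

# Candidate filter: the pattern from the [setnull] stanza.
DROP = re.compile(r'(comm="splunkd"|key="mdatp")')


def dropped_share(events):
    """Return the fraction of raw bytes that the filter would drop."""
    total = 0
    dropped = 0
    for event in events:
        size = len(event.encode("utf-8"))
        total += size
        if DROP.search(event):
            dropped += size
    return dropped / total if total else 0.0


# Two made-up events of equal length: one matches, one does not.
events = ['a key="mdatp"', "x" * 13]
print(f"{dropped_share(events):.0%} of bytes would be dropped")
```

Running this over a day of real audit logs gives a quick indication of whether a filter is worth the effort, or whether you are already in overoptimizing territory.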

There are always things that can be optimized further. And further. And further. In the end, the cost-benefit lies in finding HUGE excessive license consumption. If you start overoptimizing your license consumption, you will eventually end up missing important events and spending a lot of time – costing you more than the few gigs you may be overconsuming.

Choose your battles.